Hello World

I'm Ariel

I'm an entrepreneur, software engineer, and policy geek dedicated to building the future of AI.

LinkedIn / Twitter / Email

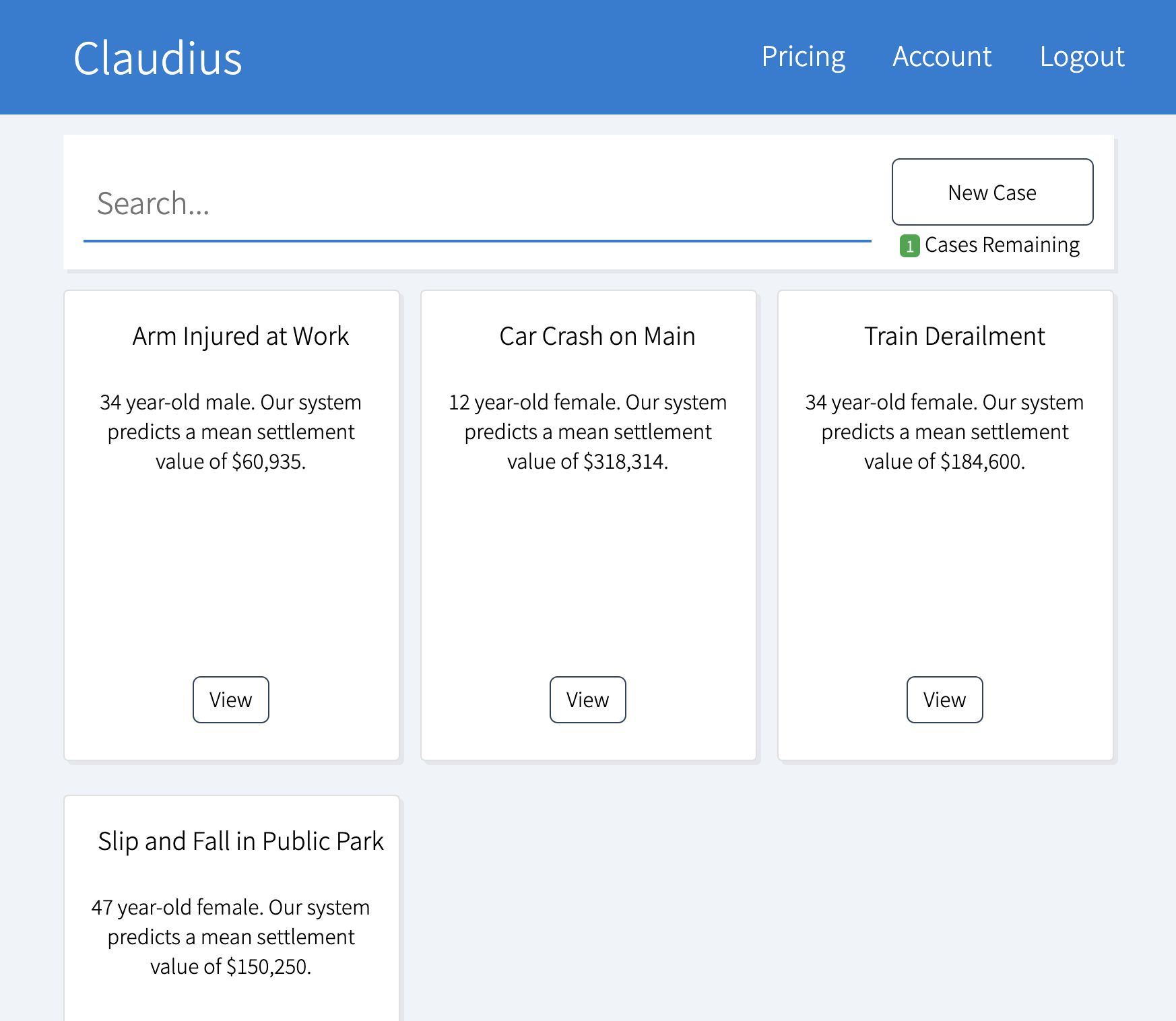

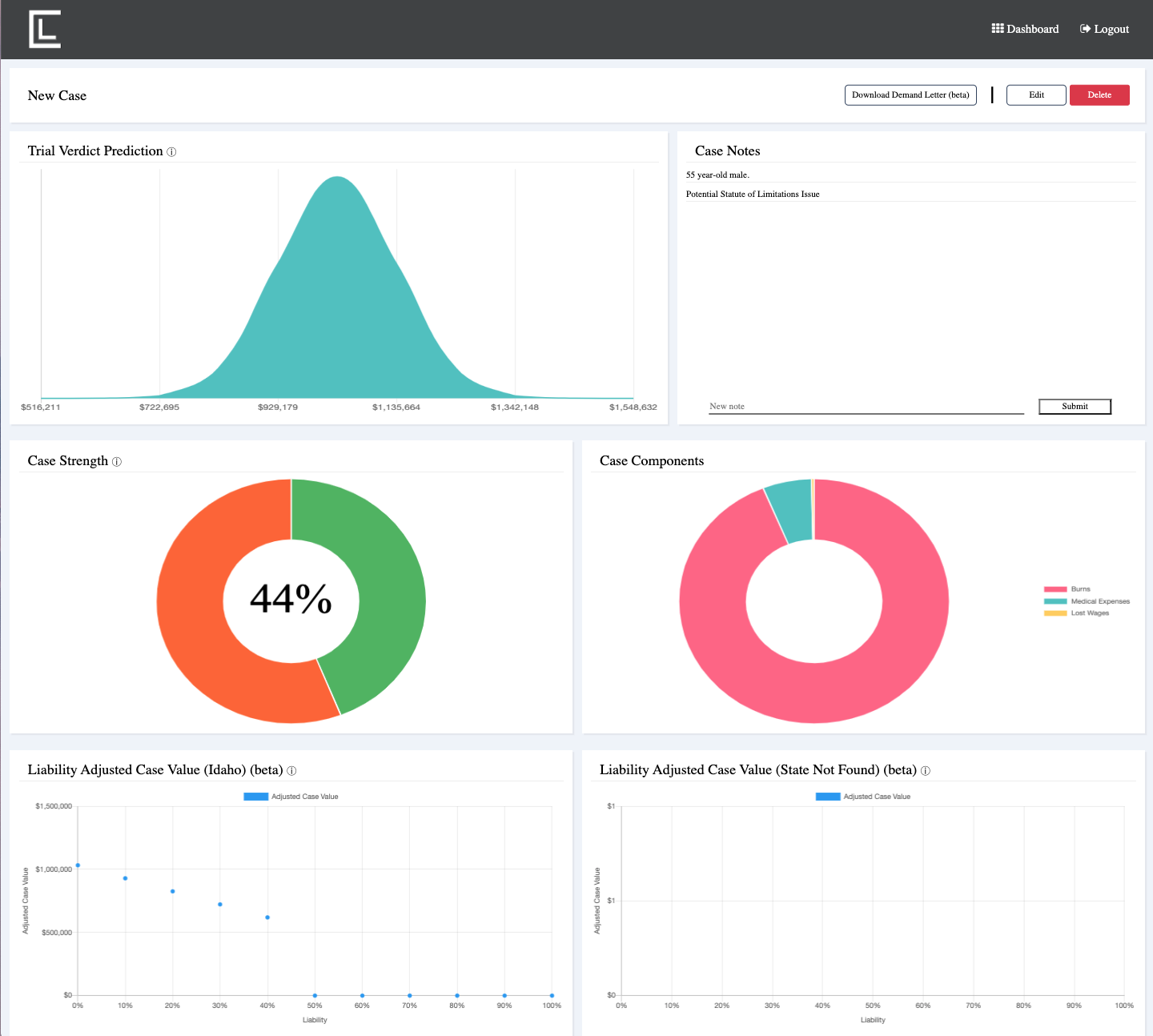

This summer I've been building Claudius, a legal tech startup where I serve as CTO.

Claudius leverages artificial intelligence, natural language processing, and machine

learning, to process new cases, identify the key legal issues, and produce data-backed

valuations as to what the cases are worth. As a result, case intake is more efficient (the process is

automated) and less costly (instead of legal assistants, paralegals, and attorneys, it's Claudius who

reviews new cases); early case assessment is more accurate, so attorneys can spend more time on the

cases that matter for their bottomline; and settlement is optimized. As a case progresses, attorneys

know precisely when to settle.

Claudius is a member company of the Princeton University Keller Center eLab Incubator and Accelerator

(2020) and was a member of the 2020 NSF Innovation Corps program.

I've learned in industry...

I'm an impact-focused software engineer thriving in a fast-paced, results-oriented, team atmosphere. I look to leverage my study of CV/DL and experience in industry to solve modern challenges with scalable solutions.

Software Engineering Intern

Tel Aviv, Israel

Aidoc is a medical-imaging startup that applies artificial intelligence to radiology to increase accuracy and speed of diagnosis- Delivered a Windows/Ubuntu security infrastructure that protected company devices stored onsite and offsite from direct-access attacks by employing an in-place encryption protocol

- Streamlined production-build deployments and minimized production downtime by building a Slackbot integrated with Azure Insights and Octopus

Software Engineering Intern

Holmdel, New Jersey

- Supported AT&T’s transition to a microservice-based system architecture by implementing and analyzing a containerized system leveraging Docker, Kubernetes, and Calico on a Unix OS

- Solved consistent local memory shortage problems by configuring a remote server for research and analysis of platforms used

...and in academia

At Princeton, I study Computer Science with a special focus in Computer Vision and Machine Learning. I've earned a 3.95 departmental GPA and a 3.65 cumulative GPA. My favorite technical courses have been:

- Computer Vision

- Advanced Computer Vision (Graduate Level)

- Computer Vision Independent Work (advised by Professor Jia Deng)

- Neural Networks: Theory and Application

- Probability and Stochastic Systems

- Principles of Computer System Design

- Reasoning About Computation

My favorite non-technical courses have been:

- Europe and the World (Senior-level seminar in Comparative Politics)

- Muslims and the Qur'an

- Literature and Medicine

- Princeton Humanities Sequence

My Projects

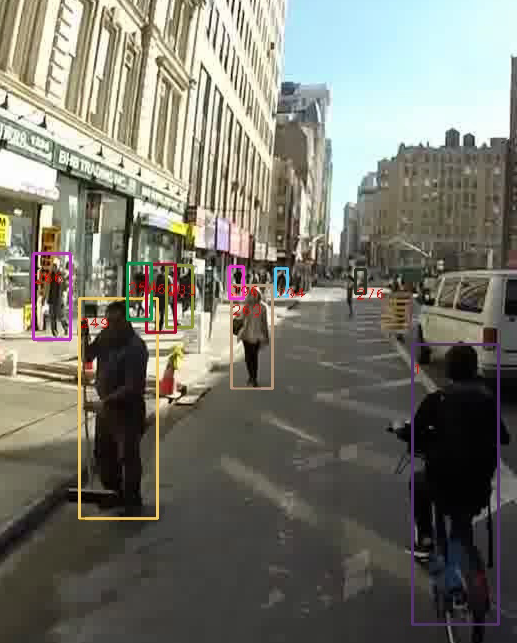

Single Shot Multiple Object Tracking and Segmentation

Paper

Multiple object tracking and segmentation (MOTS) is a new problem in the field of computer vision that combines multiple object tracking and pixel level instance segmentation. I proposed Single Shot MOTS, a joint detection, embedding, and segmentation system modeled off the work of Wang, et al. My system produced comparable results to the state of the art baseline system: Track R-CNN. I was able to achieve a runtime speed of 3.2 frames per second, which was a slight improvement on the runtime of Track R-CNN. I concluded that a joint detection, embedding, and segmentation system was capable of achieving comparable results to a two-part system such as TrackR-CNN.

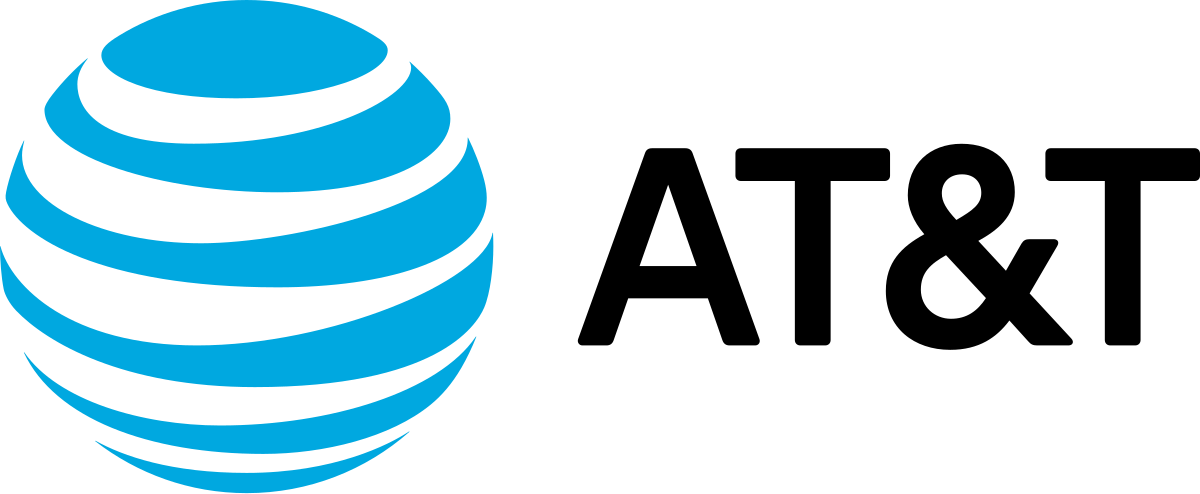

A Deeper Dive into Multi-Face Tracking in Unconstrained Videos

Paper | Poster

The problem of multi-object tracking is particularly interesting in the scope of videos containing multiple camera angle switches. I followed the approach of Lin and Hung in ”A Prior-Less Method for Multi-Face Tracking in Unconstrained Videos” to develop a prior-less multi-face tracking system for use in unconstrained videos. Results achieved with this system closely approximate the results of Lin and Hung. I then edited two components of the system as put forward by Lin and Hung, namely the tracklet generator and the feature extractor. Doing so allowed me to observe experimentally that the quality of the tracklet generator, and necessarily the face or body part detector, used in the system has the largest effect on the performance of the system. I concluded that the tracklet generator and associated object detector of a multiple object tracking system have the greatest impact on performance of that system.

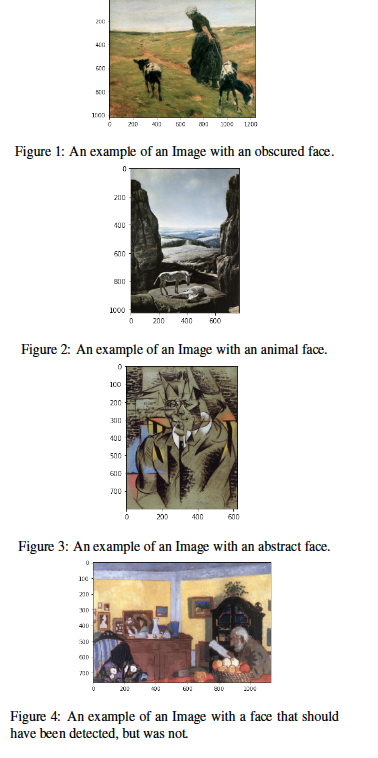

WikiArt Analysis Using Facial Emotion Analysis

Paper

This project compared the emotions expressed by the subjects of portraits to the emotions evoked by the artworks that they are in. I used the FER-2013 and WikiArt Emotions datasets to train a model to predict the emotions expressed by the subjects of a painting and compare these results with the emotions that painting evokes in its audience. While the emotions observed in the subjects by the audience may not always be the same as those the audience experiences while viewing a given painting, the patterns between the two are especially intriguing in order to better understand the relationship between sympathy, empathy, and the artistic merit of an artwork.

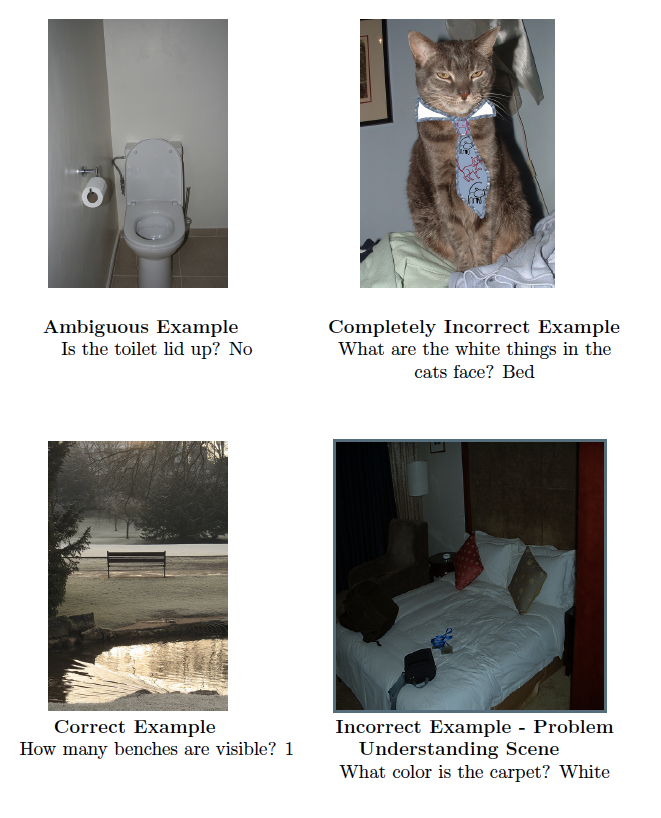

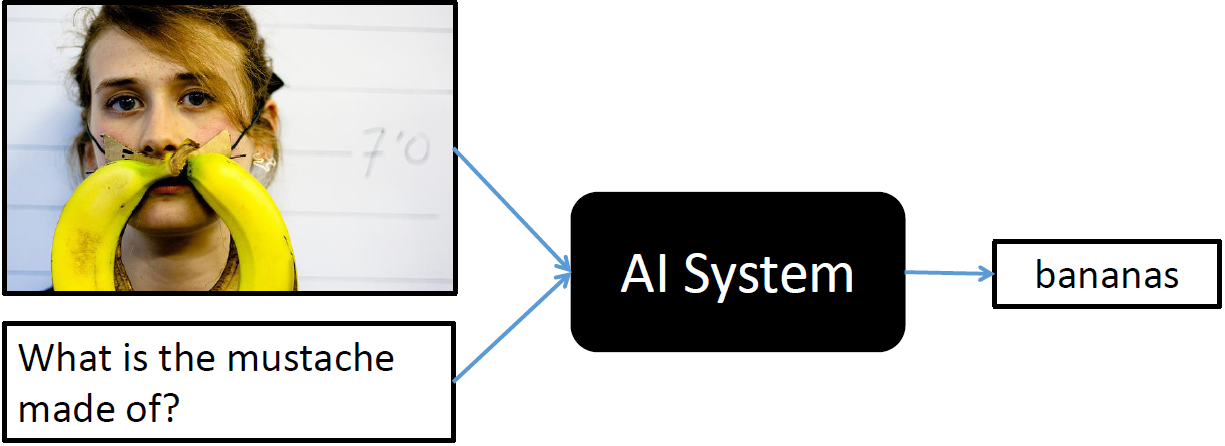

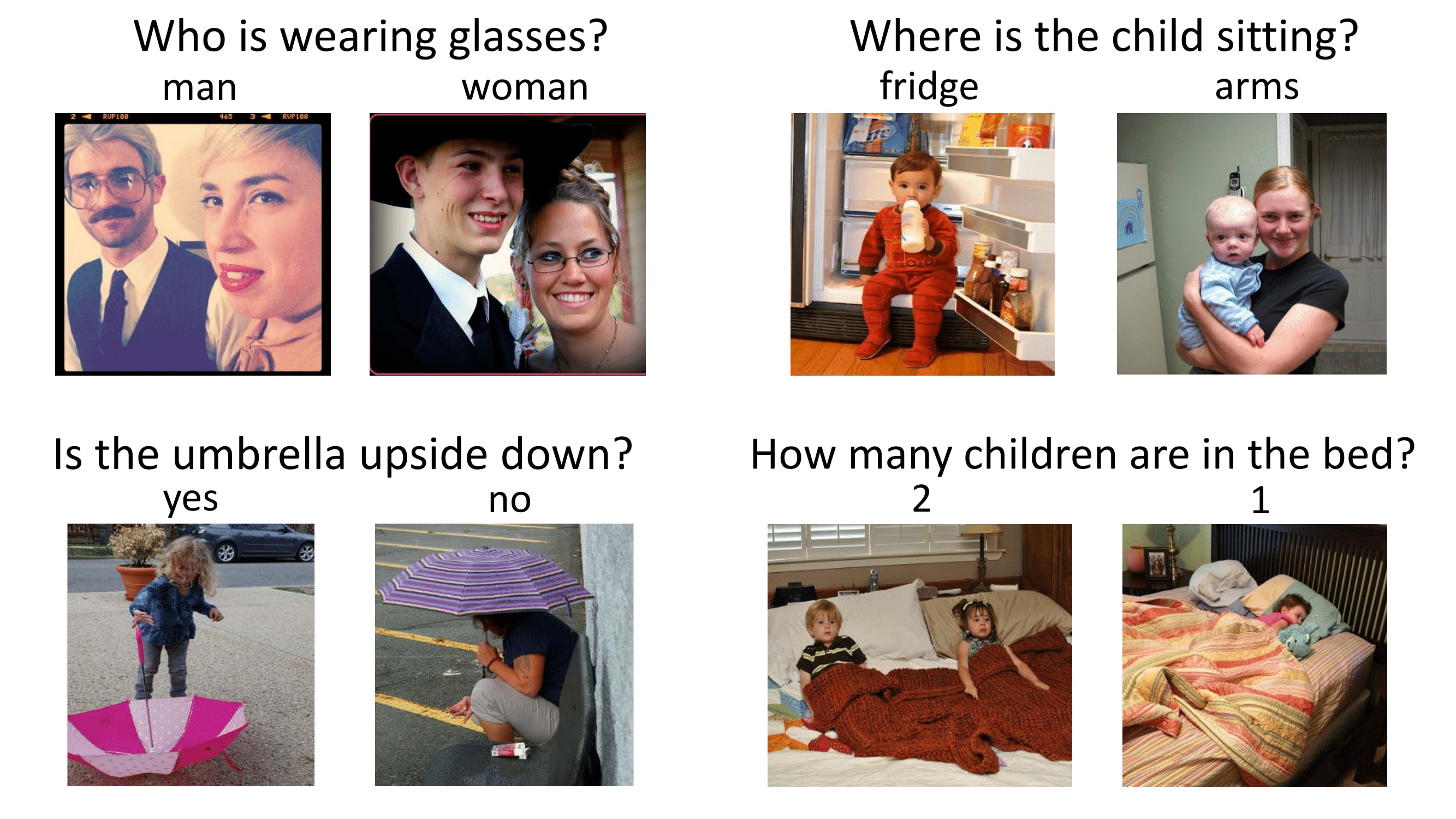

Visual Question Answering

VQA is a dataset containing open-ended questions about images. These questions require an understanding of vision, language and commonsense knowledge to answer. My implementation used an LSTM with two hidden layers to extract 1024-dimensional feature vectors from the questions. I used ResNet to extract 1024-dimensional feature vectors from the images. In line with the approach of Agrawal, et al., I elementwise multiplied the question and image feature vectors, and fed the product through a linear network. The network was trained to predict single-word answers from a set of 1000 common answers. I was able to achieve a 49.4% accuracy rate on the test set.